An introduction to distributed systems for web developers

The goal of this article is to introduce necessary concepts a web developer should know in order to better understand distributed systems.

The content of this article is heavily inspired by the book System Design Interview – An insider’s guide.

Servers

We often hear about servers but what exactly are they and what role do they play in systems design?

Servers are computers placed in a data center that perform some action for our applications.

In a web application, at least one server runs a web server process, such as Apache or Nginx, which makes web content available on port 80 (HTTP) or port 443 (HTTPS).

The fact that a server runs a web server process makes it a web server.

However, the same server could also run a database process which exposes a database from another port, for example, MongoDB on port 27017. This would make the same server also a database server.
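
To make the idea of "a process that listens on a port" concrete, here is a minimal sketch using only Python's standard library. The handler name `HelloHandler` and the helpers `start_server` and `fetch` are made up for this illustration, and the server binds to whatever free port the operating system hands out rather than to port 80 or 443, which usually require elevated privileges.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET request with a plain-text body."""

    def do_GET(self):
        body = b"hello from the web server process\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default request logging for this example


def start_server(port: int = 0) -> HTTPServer:
    """Start the web server process in a background thread.

    Port 0 asks the operating system for any free port.
    """
    server = HTTPServer(("127.0.0.1", port), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(server: HTTPServer) -> str:
    """Act as the client: connect to the server's port and read the response."""
    host, port = server.server_address[:2]
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", "/")
        return connection.getresponse().read().decode()
    finally:
        connection.close()
```

Calling `start_server()` and then `fetch(server)` on the same machine mirrors the development setup described next, where one computer plays every role.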

In a development environment, we often run the web application we are developing entirely on our machine. Our personal computer becomes a server responsible for serving frontend, backend and the database.

Such a system, where everything runs on a single machine, is called monolithic or centralized. It is the opposite of a distributed system.

When the parts of a system are instead spread over many servers, it is called a distributed system. This brings us to a ChatGPT definition of a distributed system:

A distributed system is a network of interconnected computers that work together to achieve a common goal by sharing resources, communicating, and coordinating their actions, often spanning multiple geographic locations.

Factors that affect software systems

When we are building a system such as a web application, we should pay attention to the following factors:

- Latency (delay)

- Availability

- Scalability

Latency is the delay between sending a request and receiving the response. Low latency means a small delay, so the application feels fast.

Availability refers to how well the web application keeps working through disruptions, for example a power outage in the data center where our server is hosted. Availability is related to the concept of a single point of failure: a component whose failure brings down the whole system.
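
A bit of arithmetic shows why removing a single point of failure matters. The sketch below assumes that servers fail independently of each other, which is a simplification (a power outage can take down every server in the same data center), and the 99% figure is only an illustrative number.

```python
HOURS_PER_YEAR = 365 * 24


def combined_availability(single: float, servers: int) -> float:
    """Availability of `servers` redundant servers.

    Assumes failures are independent, so the system is only down
    when every server is down at the same time.
    """
    if not 0.0 <= single <= 1.0:
        raise ValueError("single must be between 0 and 1")
    if servers < 1:
        raise ValueError("servers must be at least 1")
    return 1.0 - (1.0 - single) ** servers


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours of downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR
```

Under these assumptions, one server that is up 99% of the time is down about 87.6 hours per year, while two such servers behind a failover mechanism reach roughly 99.99%, or under one hour of downtime per year.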

Scalability refers to how the system copes with an increase in usage, such as a peak in traffic or steady growth over time.

These are the problems distributed systems aim to solve. Distributing a system across several servers can make it faster, more reliable and easier to scale.

Solutions to monolithic problems

In order to solve the problems mentioned above, we can employ the following strategies.

- Horizontal scaling

- Data replication

- Load balancing

- Caching

- Message queues

Horizontal scaling

Horizontal scaling refers to the process of adding more servers to the system.

This is the opposite of vertical scaling, which means increasing the capacity (CPU, RAM, storage) of the existing servers. Vertical scaling is usually less desirable because it becomes less cost effective as machines get bigger and because a single computer has a hard capacity limit.

By adding more servers you are able to address all three concerns because:

- If one server fails, another server can take over the workload, thus addressing the availability concern.

- If you are experiencing a lot of traffic, adding another server to handle requests increases the processing power of your system, thus addressing the latency and scalability concerns.

- Adding a server closer to the geographic location of your users improves latency due to the decrease of physical distance.
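
The effect of physical distance can be estimated with a quick calculation. The sketch below assumes signals travel through optical fiber at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum) and ignores routing detours, queuing and server processing time, so the result is a lower bound rather than a prediction.

```python
# Approximate signal speed in optical fiber, in kilometers per second.
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time, in milliseconds, over `distance_km`."""
    if distance_km < 0:
        raise ValueError("distance_km must not be negative")
    return 2 * distance_km * 1000 / SPEED_IN_FIBER_KM_PER_S
```

For a user 10,000 km away from the server this gives at least 100 ms per round trip, compared with 5 ms for a server 500 km away.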

Horizontal scaling can involve both web servers and database servers.

When it involves database servers, a common approach is sharding, which splits the data into partitions (shards) and stores each one on a different server.
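
One simple way to decide which database server holds a given record is to hash a key and take the remainder by the number of shards. The following is a minimal sketch of that idea, not a description of how any particular database does it; the function name `shard_for` and the example keys are made up for illustration.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a record key (for example a user id) to a shard index.

    A cryptographic hash is used instead of Python's built-in hash()
    because hash() of a string changes between interpreter runs.
    """
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

With four database servers, `shard_for("user-42", 4)` always returns the same index between 0 and 3, so every web server agrees on where that user's data lives. A known drawback of this simple scheme is that changing `shard_count` moves most keys to a different shard; consistent hashing is a common way to reduce that.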

Data replication

Data replication means keeping multiple copies of your data on different servers. This improves availability and addresses the single point of failure concern, because the data survives the loss of any one server.

Data replication is usually achieved by following the master/slave model (also called primary/replica), where a master database handles write operations and copies the changes to the slaves, which handle read operations.
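
The routing of reads and writes in this model can be sketched with plain dictionaries standing in for databases. The class `ReplicatedStore` is hypothetical, and it copies each write to the slaves immediately for simplicity; real databases often replicate asynchronously, so a read shortly after a write may return stale data.

```python
import itertools


class ReplicatedStore:
    """Toy master/slave store: writes go to the master, reads to the slaves."""

    def __init__(self, replica_count: int):
        if replica_count < 1:
            raise ValueError("replica_count must be at least 1")
        self.master = {}
        self.replicas = [{} for _ in range(replica_count)]
        # Rotate through the slaves so reads are spread evenly.
        self._next_replica = itertools.cycle(range(replica_count))

    def write(self, key, value):
        """Apply the write on the master, then copy it to every slave."""
        self.master[key] = value
        for replica in self.replicas:
            replica[key] = value

    def read(self, key):
        """Serve the read from the next slave in rotation (None if missing)."""
        index = next(self._next_replica)
        return self.replicas[index].get(key)
```

Since most web applications read far more often than they write, adding slaves is a straightforward way to increase read capacity.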

Load balancer

A load balancer is crucial in a distributed system because it directs traffic to one of multiple servers, preventing any single server from being overloaded.

A load balancer may be the entry point to a data center, directing requests to servers that are reachable only through private IP addresses. Alternatively, it may be a global load balancer that directs requests to servers located in different networks.
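
One of the simplest strategies a load balancer can use is round robin: hand each new request to the next server in the list and skip servers known to be down. The sketch below illustrates only that selection logic; the class name, the `mark_down`/`mark_up` methods and the private IP addresses in the usage note are assumptions made for the example.

```python
class RoundRobinBalancer:
    """Picks servers in rotation, skipping any that are marked as down."""

    def __init__(self, servers):
        self._servers = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._healthy = set(self._servers)
        self._index = 0

    def mark_down(self, server):
        """Stop sending traffic to a server, e.g. after a failed health check."""
        self._healthy.discard(server)

    def mark_up(self, server):
        """Resume sending traffic to a known server."""
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        """Return the next healthy server in rotation."""
        for _ in range(len(self._servers)):
            server = self._servers[self._index]
            self._index = (self._index + 1) % len(self._servers)
            if server in self._healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

For example, a balancer created with `["10.0.0.1", "10.0.0.2", "10.0.0.3"]` returns the three addresses in turn, and after `mark_down("10.0.0.2")` it alternates between the remaining two.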

Caching

Caching is an important technique in any system. It makes data retrieval faster by storing data either in faster storage (such as a computer's RAM) or in a location geographically closer to the client (such as a CDN or an HTTP cache).

It’s therefore an important tool to address the latency concern.
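
A common pattern is to check the cache first and only go to the slower data source on a miss, keeping each entry for a limited time. The sketch below shows that pattern with an in-memory dictionary; `TTLCache`, `get_or_load` and the `load` callback are names invented for this example, and a production setup would more likely use a dedicated cache server.

```python
import time


class TTLCache:
    """In-memory cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested
        self._entries = {}   # key -> (expires_at, value)

    def get_or_load(self, key, load):
        """Return the cached value, or call `load(key)` on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the slow source is not touched
        value = load(key)    # cache miss: fetch from the slow source
        self._entries[key] = (now + self._ttl, value)
        return value
```

Here `load` stands for whatever is expensive, typically a database query. The expiry time is a trade-off: a longer value means fewer slow lookups but a higher chance of serving outdated data.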

Content delivery network (CDN)

Content delivery networks provide edge servers, which primarily store static content and deliver it to clients located close to them.

Content delivery networks are a form of caching, since they store static content requested by one user and can deliver that same content to other users on subsequent requests.

Hence CDNs address the latency concern.

Message queues

A message queue is a component, typically held in memory and often made durable, that enables asynchronous processing by decoupling the components of a system.

Message queues use the provider/subscriber model (often called producer/consumer), where a provider publishes a job to the queue and a subscriber picks up the job when it is ready to process it.

This allows the provider and subscriber to communicate with each other even when one of them is not available and allows separate scaling of these two system components.

An example of this would be order placement in an e-commerce application. A client places an order, which is sent to a queue, ready to be picked up by the order fulfillment service. This decouples order placement from order fulfillment.
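
The order example can be sketched inside a single Python process with the standard library's `queue.Queue` and a worker thread. This is only an illustration of the decoupling: in a real distributed system the queue would be a separate service that both sides reach over the network, and the function names and the `"shipped order"` message are made up for this example.

```python
import queue
import threading


def run_fulfillment_worker(orders: queue.Queue, fulfilled: list) -> None:
    """Subscriber: processes orders one by one until it sees the None sentinel."""
    while True:
        order = orders.get()
        try:
            if order is None:  # sentinel: no more orders will arrive
                return
            fulfilled.append(f"shipped order {order['id']}")
        finally:
            orders.task_done()


def place_orders(order_ids) -> list:
    """Provider: queues orders, then lets a worker drain the queue."""
    orders = queue.Queue()
    fulfilled = []
    # Orders are accepted even though no worker is running yet.
    for order_id in order_ids:
        orders.put({"id": order_id})
    worker = threading.Thread(target=run_fulfillment_worker, args=(orders, fulfilled))
    worker.start()
    orders.put(None)  # tell the worker to stop once the queue is drained
    worker.join()
    return fulfilled
```

Note that the orders are placed before the worker starts, which is the point of the pattern: the provider does not need the subscriber to be available at the moment it publishes.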

Bringing it all together

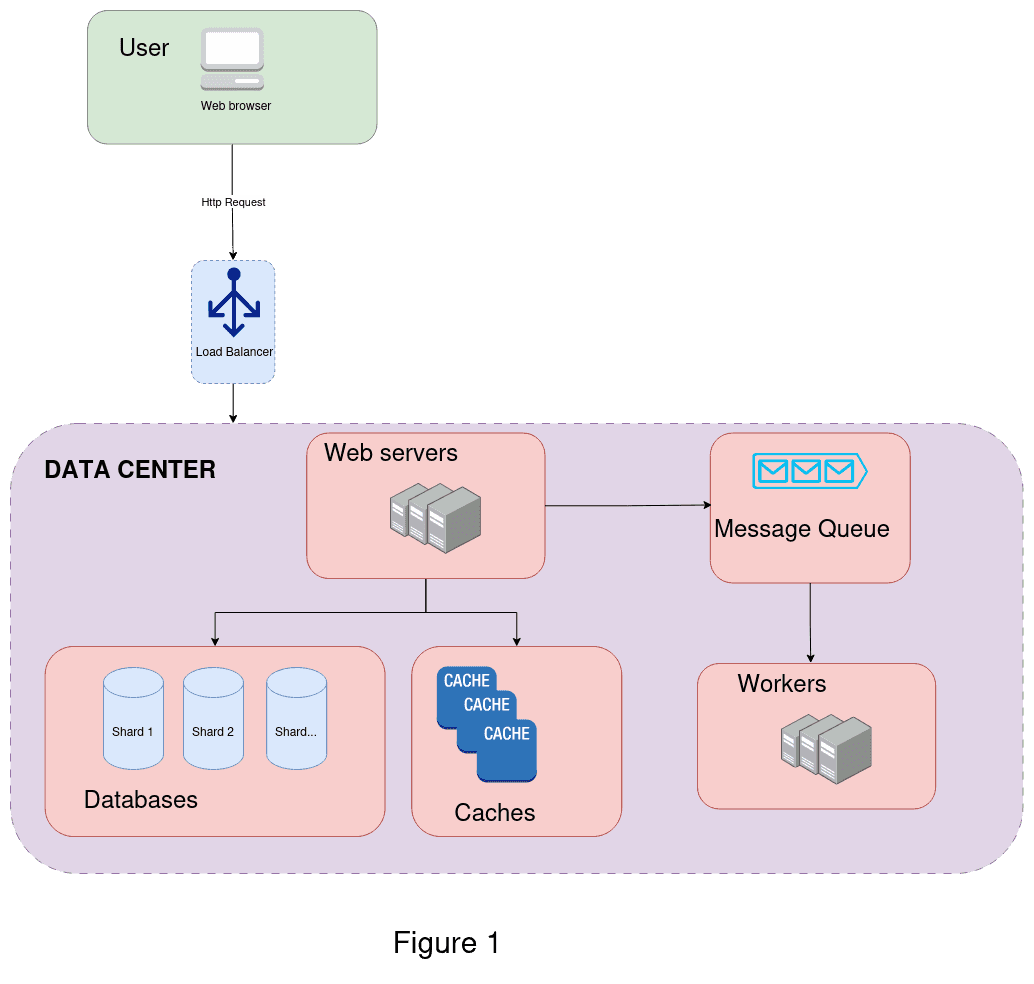

Figure 1 demonstrates an example of a web application system with the components mentioned above.

- A user makes an HTTP request from their browser.

- The request is forwarded to the load balancer of the data center where the servers are hosted.

- The load balancer forwards the request to a web server.

- The web server first tries to read the data from the cache pool. If the data is not cached, it reads it from the database pool.

- To handle the processing of the request, the web server posts a job on the message queue.

- The job is picked up by a worker server as soon as one becomes available.